Facial expressions play an important role in the recognition of emotions and are a key part of non-verbal communication.

Just as you recognize a certain tone in your mother’s voice (yes, *that* voice), you can quickly read a person’s feelings from their facial expression.

Facial expressions help us ascertain a person’s emotions and frame of mind.

Researchers and scientists utilize facial expression detection in exploring the field of emotion recognition.

Much of the research focuses on recognizing seven basic emotional states: neutral, joy, surprise, anger, sadness, fear, and disgust.

Is facial expression detection the same as facial recognition?

They are two different fields, though they are related, which is why they’re often mistaken for each other.

It’s much like how the words “emoticons” and “emojis” get swapped, or how “cement” and “concrete” are used interchangeably.

FYI: Emoticons are the faces you create by typing different characters on your keyboard; emojis are actual pictures, like faces or animals. Concrete is a strong building material made of several ingredients, held together by cement.

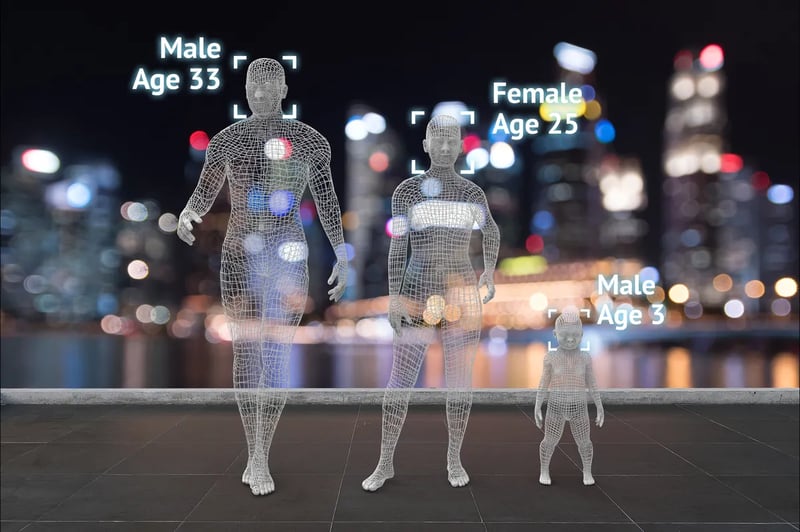

Facial expression detection observes and analyzes human behavior and emotions. Facial recognition, on the other hand, uses biometric software to map out an individual’s facial features, storing them as a faceprint.

The relationship between expressions and emotion is nuanced, complex, and not prototypical. Emotion shows up in more than facial expressions; it also comes through in body language and tone of voice.

What is facial expression detection (FED)?

Facial expression detection uses biometric markers to detect emotions in human faces. It analyzes emotional sentiment and detects the seven basic or universal expressions. Paul Ekman, an American psychologist, pioneered facial expression detection.

He created an “atlas” of over 10,000 facial expressions, including “microexpressions”. He also went on to develop a helpful tool, the Facial Action Coding System (FACS). FACS is an anatomically based system for describing all observable facial movement for every emotion.

Why is detecting facial expression important?

Facial expressions are one of the many gestures that make up nonverbal communication. Computer-based facial expression detection aims to mimic how humans interpret these subtle cues. These cues are essential to interpersonal interactions because they add information to spoken words.

They’re what we rely on to know that we’ve taken too long to respond to an interview question, or that our friend is actually upset about his breakup, despite what he says.

For remote recruiting and hiring, facial expression detection is useful in painting a broader picture of a person. A resume can only tell so much. A video add-on to the interviewing process is useful in highlighting the candidate’s persona. As Yi Xu, CEO of Human, stated, “we are likely to make recruitment decisions based on chemistry, mood or context rather than on skills, suitability for the role or level of emotional intelligence”.

How does facial expression detection work?

Using an image or video, the software extracts and analyzes expression information. Trained on ample amounts of unbiased emotional-response data, it can detect the seven universal expressions. It often analyzes facial expressions together with voice and body language to provide greater insight into a person’s emotional state and behavior.
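
To make the idea a little more concrete, here is a minimal Python sketch of the last step of such a system: turning per-frame scores into one of the seven expressions. It assumes some upstream model (not shown, and purely hypothetical here) has already produced one raw score per emotion for each video frame. The function names and the sample numbers are made up for illustration, and real products may work quite differently.

```python
import math

# The seven basic emotional states named earlier in this article.
EMOTIONS = ["neutral", "joy", "surprise", "anger", "sadness", "fear", "disgust"]


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]  # shift for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def classify_video(frames):
    """Average per-frame probabilities and return (top label, its probability).

    `frames` is a list of score lists, one per frame, each with one
    score per entry in EMOTIONS (an assumption of this sketch).
    """
    if not frames:
        raise ValueError("need at least one frame")
    for scores in frames:
        if len(scores) != len(EMOTIONS):
            raise ValueError("each frame needs one score per emotion")
    per_frame = [softmax(scores) for scores in frames]
    mean = [sum(column) / len(per_frame) for column in zip(*per_frame)]
    best = max(range(len(EMOTIONS)), key=lambda i: mean[i])
    return EMOTIONS[best], mean[best]


# Made-up scores for two frames of a (hypothetical) smiling face.
frame_scores = [
    [0.1, 2.5, 0.3, -1.0, -0.5, -1.2, -0.8],
    [0.4, 1.9, 0.9, -0.7, -0.2, -1.0, -0.6],
]
label, confidence = classify_video(frame_scores)
print(label, round(confidence, 2))
```

Averaging across frames is just one simple way to handle video; a single image would be passed in as a list with one frame.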

Is there bias in facial expression detection?

Bias has long plagued facial recognition algorithms, largely because neural networks are trained on unequal numbers of faces from different groups of people. With facial expression detection, however, the AI is looking solely for emotion and behavior, not the individual’s faceprint.

Therefore, if a bias does exist, the system is likely to misread an expression only when it hasn’t seen ample samples of people smiling or wrinkling their noses. The bias is also less “high stakes” than in facial recognition. In facial recognition, if the software is incorrect, it identifies the wrong person. In facial expression detection, the question is about a specific facial expression, rather than an individual person.
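
One simple way to look for this kind of gap is to count how many training samples exist for each expression within each group of people. The Python sketch below is only an illustration of that idea: the group names, the `min_count` threshold, and the tiny sample list are all assumptions invented for the example, not anything a particular vendor is known to use.

```python
from collections import Counter
from itertools import product

EMOTIONS = ["neutral", "joy", "surprise", "anger", "sadness", "fear", "disgust"]


def sparse_cells(samples, groups, min_count=100):
    """Return {(group, emotion): count} for every pair with too few samples.

    `samples` is a list of (group, emotion) tuples, one per labeled image.
    """
    counts = Counter(samples)
    return {
        (group, emotion): counts[(group, emotion)]
        for group, emotion in product(groups, EMOTIONS)
        if counts[(group, emotion)] < min_count
    }


# Toy data: plenty of "joy" for both groups, very little "disgust".
samples = (
    [("group_a", "joy")] * 3
    + [("group_a", "disgust")] * 1
    + [("group_b", "joy")] * 3
)
gaps = sparse_cells(samples, groups=["group_a", "group_b"], min_count=2)
for (group, emotion), count in sorted(gaps.items()):
    print(f"{group}: only {count} sample(s) of {emotion}")
```

Any pair that shows up in the result is a place where the model has had little chance to learn what that expression looks like for that group.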

Facial expression detection is about understanding the person---not simply visualizing the person.
